Tabular Temporal-Difference Prediction

Kobus Esterhuysen

July 19, 2022

So far, we have always had a model of the environment, in the form of transition probabilities. In the real world we often do not have these probabilities. We simply get individual experiences of next state and reward, given that we take a specific action in a specific state. However, we still need a way to obtain the optimal value function or the optimal policy, and there are algorithms for this. We have now entered the subfield of Reinforcement Learning. When we have a model (as in previous parts), the subfield is called Dynamic Programming or Approximate Dynamic Programming.

Note that we can use Reinforcement Learning even if we may have the option to obtain a model. Sometimes the state space is so large that obtaining a model is hard or the computational aspects become intractable.

Let us repeat some points related to prediction:

- prediction is the problem of estimating the value function of an MDP given a policy
- equivalently, it is the problem of estimating the value function of the MRP implied by that policy

In this project we choose to work with MRPs, rather than MDPs, relying on the latter point. The relationship with the MRP environment is such that the:

- Environment is available as an interface that serves up individual experiences of (next state, reward), given a current state. Note the absence of an action.
- Environment might be real or simulated.

We define the agent’s experience with the environment as follows:

- atomic experience - the agent receives a single experience of (next state, reward), given the current state
- trace experience - starting from a state $S_0$, the agent receives a sequence of atomic experiences, until the trace terminates or is cut off

The RL prediction problem is to estimate the value function, given a stream of atomic experiences or a stream of trace experiences.

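An MRP’s value function is:

$$V(s) = \mathbb{E}[G_t \mid S_t = s]$$

for all non-terminal states $s$ and all $t = 0, 1, 2, \ldots$

where the return $G_t$ is the discounted sum of future rewards:

$$G_t = \sum_{i=t+1}^{\infty} \gamma^{i-t-1} \cdot R_i = R_{t+1} + \gamma \cdot R_{t+2} + \gamma^2 \cdot R_{t+3} + \ldots$$

This infinite sum is valid even if a trace experience terminates, say at $t = T$, because we then treat $R_i = 0$ for all $i > T$.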
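To make the return concrete, here is a minimal sketch that computes the discounted return of a finite list of rewards by working backwards through the recursion $G_t = R_{t+1} + \gamma \cdot G_{t+1}$. The function name `discounted_return` and the reward values are made up for illustration; they are not part of the course code.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Discounted return of a finite reward list R_{t+1}, R_{t+2}, ...

    Rewards beyond the end of the list are treated as zero, which is
    why the infinite sum stays well defined for a terminating trace.
    """
    g = 0.0
    # Walk backwards so each step applies G_t = R_{t+1} + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


rewards = [-1.0, -2.0, -4.0]  # hypothetical rewards R_1, R_2, R_3
g0 = discounted_return(rewards, gamma=0.9)
print(g0)  # -1 + 0.9*(-2) + 0.81*(-4), approximately -6.04

# Padding with zero rewards after termination does not change the return.
g0_padded = discounted_return(list(rewards) + [0.0] * 5, gamma=0.9)
```

The backward pass uses the same one-step recursion, reward + γ * (return of the rest), that a return-accumulating helper would apply step by step along a trace.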

We make use of the approach and code used in http://web.stanford.edu/class/cme241/.

Let us set up our Inventory problem again:

Next we look at how we want to implement the simulation of experiences as stated above in code. The essential element is the TransitionStep:

    @dataclass(frozen=True)
    class TransitionStep(Generic[S]):  #. s -> s'r (atomic experience)
        state: NonTerminal[S]
        next_state: State[S]
        reward: float

        def add_return(self, γ: float, return_: float) -> ReturnStep[S]:
            return ReturnStep(  #. s -> s'r
                self.state,
                self.next_state,
                self.reward,
                return_=self.reward + γ*return_
            )

A TransitionStep instance captures an atomic experience. It carries the state, next_state, and reward information. The add_return method takes the return of the rest of the trace (return_) and produces a ReturnStep whose return is reward + γ*return_.

In general, the input to an RL prediction algorithm will be either:

- a stream/sequence of atomic experiences: Iterable[TransitionStep[S]]
- a stream/sequence of trace experiences: Iterable[Iterable[TransitionStep[S]]]

As before, we make use of the class MarkovRewardProcess:

    class MarkovRewardProcess(MarkovProcess[S]):
        #. transition from this state
        def transition(self, state: NonTerminal[S]) -> Distribution[State[S]]:  # s'|s or s->s'
            distribution = self.transition_reward(state)

            def next_state(distribution=distribution):
                next_s, _ = distribution.sample()  #. ignores reward
                return next_s

            return SampledDistribution(next_state)

        @abstractmethod
        def transition_reward(  #. transition from this state
            self,
            state: NonTerminal[S]
        ) -> Distribution[Tuple[State[S], float]]:  #. s'r|s or s->s'r
            pass

        #. future: simulate_transition
        #. 's'imulate for 's'tep-generator
        def simulate_reward(  #. 'reward' for MarkovRewardProcess?
            self,
            start_state_distribution: Distribution[NonTerminal[S]]
        ) -> Iterable[TransitionStep[S]]:  #. sequence of atomic experiences
            state: State[S] = start_state_distribution.sample()
            reward: float = 0.

            while isinstance(state, NonTerminal):
                next_distribution = self.transition_reward(state)
                next_state, reward = next_distribution.sample()
                yield TransitionStep(state, next_state, reward)  # s -> s'r
                state = next_state

        #. 't'races for 't'race-generator
        def reward_traces(  #. 'reward' for MarkovRewardProcess?
            self,
            start_state_distribution: Distribution[NonTerminal[S]]
        ) -> Iterable[Iterable[TransitionStep[S]]]:  #. sequence of trace experiences
            while True:
                yield self.simulate_reward(start_state_distribution)

Our current focus is on the methods:

- simulate_reward()
  - operates as a step (atomic experience) generator
  - yields a sequence of (state, next state, reward) 3-tuples, i.e. a sequence of atomic experiences
- reward_traces()
  - operates as a trace (trace experience) generator
  - yields a sequence of trace experiences, each trace yielding a sequence of (state, next state, reward) atomic experiences
  - starts each trace from a state sampled from start_state_distribution
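The step-generator and trace-generator pattern can be tried out without the course library. The following is a rough sketch on a made-up 3-state chain; the names `simulate_steps` and `traces`, the uniform transitions, and the reward rule are all assumptions for illustration and do not model the inventory problem.

```python
import itertools
import random
from typing import Iterator, Tuple

Step = Tuple[int, int, float]  # (state, next_state, reward)


def simulate_steps(rng: random.Random, start: int) -> Iterator[Step]:
    """Step generator: yields atomic experiences from a toy 3-state chain."""
    state = start
    while True:  # this toy chain has no terminal state
        next_state = rng.randrange(3)   # hypothetical uniform transitions
        reward = -float(next_state)     # hypothetical reward rule
        yield (state, next_state, reward)
        state = next_state


def traces(rng: random.Random) -> Iterator[Iterator[Step]]:
    """Trace generator: yields an endless stream of step generators."""
    while True:
        # Each trace gets its own randomly sampled start state.
        yield simulate_steps(rng, start=rng.randrange(3))


rng = random.Random(0)
# Both generators are infinite, so islice is used to cut them off:
# take 2 traces, and 4 atomic experiences from each trace.
some_traces = [list(itertools.islice(trace, 4))
               for trace in itertools.islice(traces(rng), 2)]
for trace in some_traces:
    print(trace)
```

Because both levels are lazy generators, nothing is simulated until a consumer asks for the next item, which is why printing a generator only shows a `<generator object ...>` placeholder.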

[NonTerminal(state=InventoryState(on_hand=0, on_order=0)),

NonTerminal(state=InventoryState(on_hand=0, on_order=1)),

NonTerminal(state=InventoryState(on_hand=0, on_order=2)),

NonTerminal(state=InventoryState(on_hand=1, on_order=0)),

NonTerminal(state=InventoryState(on_hand=1, on_order=1)),

NonTerminal(state=InventoryState(on_hand=2, on_order=0))]

{NonTerminal(state=InventoryState(on_hand=0, on_order=0)): 0.16666666666666666, NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 0.16666666666666666, NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 0.16666666666666666, NonTerminal(state=InventoryState(on_hand=1, on_order=0)): 0.16666666666666666, NonTerminal(state=InventoryState(on_hand=1, on_order=1)): 0.16666666666666666, NonTerminal(state=InventoryState(on_hand=2, on_order=0)): 0.16666666666666666}

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=2)), next_state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), reward=-0.0)

    # this trace generator (reward_traces()) will generate 3 atomic generators (simulate_reward())
    [atom_gen for atom_gen in it.islice(si_mrp.reward_traces(ssd), n_traces)]

[<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c59750>,

<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c596d0>,

<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c597d0>]

<generator object MarkovRewardProcess.reward_traces at 0x7faf46c599d0>

[[TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=2)), next_state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), reward=-0.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-2.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), reward=-0.0)],

[TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-3.6787944117144233),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-3.6787944117144233)],

[TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=0)), reward=-2.0363832351432696),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=2)), reward=-10.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=2)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-0.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423)]]

With TAB (tabular) TD, an update is made to the value of the Value Function every time we transition from a state $S_t$ to a state $S_{t+1}$ with reward $R_{t+1}$.

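Let us consider again the Value Function update for MC (Monte-Carlo) Prediction in the TAB case:

$$V(S_t) \leftarrow V(S_t) + \alpha \cdot (G_t - V(S_t))$$

To move from MC to TD we take advantage of the recursive structure of the Value Function in the MRP Bellman equation. We do this by forming an estimate for $G_t$ from the reward $R_{t+1}$ and the current value estimate $V(S_{t+1})$:

$$V(S_t) \leftarrow V(S_t) + \alpha \cdot (R_{t+1} + \gamma \cdot V(S_{t+1}) - V(S_t))$$

where the TD target is $R_{t+1} + \gamma \cdot V(S_{t+1})$ and the TD error is $\delta_t = R_{t+1} + \gamma \cdot V(S_{t+1}) - V(S_t)$.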

Practical advantages of TD:

- it can be used with incomplete trace experiences, when
  - the experiment is disrupted
  - the experiment is curtailed
- it can be used with continuing trace experiences, when a terminal state is never reached
- it learns after each atomic experience, called continuous learning (unlike MC, which only learns after a complete trace experience)
- it can be used with any stream of atomic experiences, regardless of whether an atomic experience is part of a trace experience; atomic experiences can even be shuffled
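The tabular TD update is small enough to sketch in a few lines of plain Python. This is an illustrative sketch only: it uses a constant learning rate `alpha` (a simplifying assumption; a learning rate that decays with the visit count is also common), plain tuples instead of TransitionStep objects, and a made-up two-state example.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple

Transition = Tuple[Hashable, Hashable, float]  # (state, next_state, reward)


def tabular_td(
    transitions: Iterable[Transition],
    gamma: float,
    alpha: float,
) -> Dict[Hashable, float]:
    """Run one TD update per atomic experience and return the value table."""
    values: Dict[Hashable, float] = defaultdict(float)  # unseen states start at 0
    for state, next_state, reward in transitions:
        # TD target: one real reward plus the discounted current estimate.
        td_target = reward + gamma * values[next_state]
        # Move the estimate a fraction alpha toward the target.
        values[state] += alpha * (td_target - values[state])
    return dict(values)


# Two hypothetical atomic experiences; they need not come from one trace.
experiences = [("A", "B", 1.0), ("B", "A", 0.0)]
vf = tabular_td(experiences, gamma=0.5, alpha=0.5)
print(vf)
```

Note that the loop never needs to know where a trace starts or ends: it consumes one (state, next state, reward) triple at a time, which is what makes learning from incomplete, continuing, or shuffled experiences possible.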

With FAP (function approximation) TD, an update is made to the parameters of the Value Function every time we transition from a state $S_t$ to a state $S_{t+1}$ with reward $R_{t+1}$.

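Let us consider again the loss function for MC Prediction:

$$\mathcal{L}_{(S_t, G_t)}(w) = \frac{1}{2} \cdot (V(S_t; w) - G_t)^2$$

To move from MC to TD we do the same replacement, i.e. we replace $G_t$ with $R_{t+1} + \gamma \cdot V(S_{t+1}; w)$:

$$\mathcal{L}_{(S_t, S_{t+1}, R_{t+1})}(w) = \frac{1}{2} \cdot (V(S_t; w) - (R_{t+1} + \gamma \cdot V(S_{t+1}; w)))^2$$

This leads to the parameter update:

$$\Delta w = \alpha \cdot (R_{t+1} + \gamma \cdot V(S_{t+1}; w) - V(S_t; w)) \cdot \nabla_w V(S_t; w)$$

where, instead of taking the full gradient of the loss function, we “cheat” by ignoring the dependency of $V(S_{t+1}; w)$ on $w$. This is called a semi-gradient update.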

This resembles the technique of replacing

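The parameter update can again be broken down into 3 factors:

- step size or learning rate, which is $\alpha$
- TD error, which is $\delta_t = R_{t+1} + \gamma \cdot V(S_{t+1}; w) - V(S_t; w)$
- gradient of the value estimate with respect to the parameters, which is $\nabla_w V(S_t; w)$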
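For a linear value function $V(s; w) = w \cdot \phi(s)$ the gradient with respect to $w$ is just the feature vector $\phi(s)$, so the three factors are easy to see in code. The sketch below is an illustration under that linear assumption; the helper names `dot` and `semi_gradient_td_step` and the one-hot features are hypothetical, not taken from the course library.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def semi_gradient_td_step(
    w: Sequence[float],
    phi_s: Sequence[float],     # features of the current state
    phi_next: Sequence[float],  # features of the next state
    reward: float,
    gamma: float,
    alpha: float,
) -> List[float]:
    """One semi-gradient TD update for V(s; w) = w . phi(s)."""
    # The TD target is treated as a constant: no gradient flows through it.
    td_error = reward + gamma * dot(w, phi_next) - dot(w, phi_s)
    # learning rate * TD error * gradient of V(s; w), which is phi(s) here
    return [wi + alpha * td_error * xi for wi, xi in zip(w, phi_s)]


# With one-hot features the update reduces to the tabular case.
phi = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
w = [0.0, 0.0]
w = semi_gradient_td_step(w, phi["A"], phi["B"], reward=1.0, gamma=0.5, alpha=0.5)
w = semi_gradient_td_step(w, phi["B"], phi["A"], reward=0.0, gamma=0.5, alpha=0.5)
print(w)
```

A full gradient of the loss would add a term involving the gradient of $V(S_{t+1}; w)$; leaving it out is exactly the "cheat" described above.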

The function td_prediction() provides this prediction:

    def td_prediction(
        transitions: Iterable[mp.TransitionStep[S]],  #. stream of transition experiences
        approx_0: ValueFunctionApprox[S],
        γ: float
    ) -> Iterator[ValueFunctionApprox[S]]:

        def step(
            v: ValueFunctionApprox[S],
            transition: mp.TransitionStep[S]
        ) -> ValueFunctionApprox[S]:
            # move v(state) toward the TD target: reward + γ*v(next_state)
            return v.update([(
                transition.state,
                transition.reward + γ*extended_vf(v, transition.next_state)
            )])

        return iterate.accumulate(transitions, step, initial=approx_0)

The inputs to td_prediction() are:

- transitions: Iterable[mp.TransitionStep[S]] - stream of transition experiences
- approx_0: ValueFunctionApprox[S] - initial approximation of the value function
- γ: float - discount factor

To prepare the stream of transition experiences we make use of three helper functions:

- unit_experiences_from_episodes()
- fmrp_episodes_stream()
- mrp_episodes_stream()

    def mrp_episodes_stream(
        mrp: MarkovRewardProcess[S],
        start_state_distribution: NTStateDistribution[S]
    ) -> Iterable[Iterable[TransitionStep[S]]]:
        return mrp.reward_traces(start_state_distribution)

    def fmrp_episodes_stream(
        fmrp: FiniteMarkovRewardProcess[S]
    ) -> Iterable[Iterable[TransitionStep[S]]]:
        return mrp_episodes_stream(fmrp, Choose(fmrp.non_terminal_states))

    def unit_experiences_from_episodes(
        episodes: Iterable[Iterable[TransitionStep[S]]],
        episode_length: int
    ) -> Iterable[TransitionStep[S]]:
        return it.chain.from_iterable(
            it.islice(episode, episode_length) for episode in episodes
        )

Let us see how they behave.
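As a standalone illustration of the flattening step, separate from the notebook outputs that follow, here is a sketch of the same chain-plus-islice pattern on toy data. The function name `flatten_truncated` and the string "experiences" are placeholders chosen for this example.

```python
import itertools
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def flatten_truncated(
    episodes: Iterable[Iterable[T]],
    episode_length: int,
) -> Iterator[T]:
    """Keep the first episode_length items of each episode, then chain them."""
    return itertools.chain.from_iterable(
        itertools.islice(episode, episode_length) for episode in episodes
    )


episodes = [["a1", "a2", "a3"], ["b1", "b2", "b3"]]  # two toy episodes
flat = list(flatten_truncated(episodes, episode_length=2))
print(flat)  # ['a1', 'a2', 'b1', 'b2']
```

The result is a single stream in which episode boundaries are no longer visible, which is all a TD learner needs.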

<generator object MarkovRewardProcess.reward_traces at 0x7faf46c59850>

[<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c59cd0>,

<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c59550>]

<generator object MarkovRewardProcess.reward_traces at 0x7faf46c59f50>

[<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c59d50>,

<generator object MarkovRewardProcess.simulate_reward at 0x7faf46c59450>]

    initial_vf_dict: Mapping[NonTerminal[InventoryState], float] = \
        {s: 0. for s in si_mrp.non_terminal_states}
    initial_vf_dict

{NonTerminal(state=InventoryState(on_hand=0, on_order=0)): 0.0,

NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 0.0,

NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 0.0,

NonTerminal(state=InventoryState(on_hand=1, on_order=0)): 0.0,

NonTerminal(state=InventoryState(on_hand=1, on_order=1)): 0.0,

NonTerminal(state=InventoryState(on_hand=2, on_order=0)): 0.0}

    n_traces = 2
    n_atoms = 4
    some_traces = [list(it.islice(trace, n_atoms)) for trace in it.islice(traces, n_traces)]; some_traces

[[TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-3.6787944117144233),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), reward=-0.0)],

[TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-2.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423)]]

    transition_experiences = list(unit_experiences_from_episodes(episodes=some_traces, episode_length=4))
    transition_experiences

[TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-3.6787944117144233),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), reward=-0.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=1)), next_state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), reward=-1.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=2, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), reward=-2.0),

TransitionStep(state=NonTerminal(state=InventoryState(on_hand=1, on_order=0)), next_state=NonTerminal(state=InventoryState(on_hand=0, on_order=1)), reward=-4.678794411714423)]

The updates are accumulated over all the input transitions, making use of the update method of the FunctionApprox class. The ValueFunctionApprox is updated after each transition experience. The accumulation is driven by iterate.accumulate, a wrapper around itertools.accumulate, and the function applied at each step is the step function inside td_prediction.

Let us investigate how the accumulate() function in the itertools module works.

Multiply

<itertools.accumulate at 0x7faf461a8460>

Max
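As a small, self-contained illustration of how itertools.accumulate behaves with multiplication and with max, consider the sketch below. The `data` list is a made-up example. The last case shows one way to supply a starting value by chaining it in front of the data, which also works on Python versions before 3.8, where the `initial` keyword of itertools.accumulate is not available.

```python
import itertools
import operator

data = [3, 1, 4, 1, 5]  # made-up input

# accumulate is lazy: it returns an iterator, so wrap it in list() to see values.
running_product = list(itertools.accumulate(data, operator.mul))
running_max = list(itertools.accumulate(data, max))

# Emulate a starting value by putting it in front of the data.
seeded_sum = list(itertools.accumulate(itertools.chain([10], data), operator.add))

print(running_product)  # [3, 3, 12, 12, 60]
print(running_max)      # [3, 3, 4, 4, 5]
print(seeded_sum)       # [10, 13, 14, 18, 19, 24]
```

In each case the binary function receives the accumulated result so far and the next input item, which is the same shape as a step function that takes the current value function approximation and the next transition.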

Let us now focus on the essential part of this project. We create an instance of the MRP.

This is the exact value function:

{NonTerminal(state=InventoryState(on_hand=0, on_order=0)): -35.511,

NonTerminal(state=InventoryState(on_hand=0, on_order=2)): -28.345,

NonTerminal(state=InventoryState(on_hand=0, on_order=1)): -27.932,

NonTerminal(state=InventoryState(on_hand=1, on_order=0)): -28.932,

NonTerminal(state=InventoryState(on_hand=1, on_order=1)): -29.345,

NonTerminal(state=InventoryState(on_hand=2, on_order=0)): -30.345}

Now we perform a Temporal-Difference Prediction. First, we generate a stream of transition experiences:

<generator object MarkovRewardProcess.reward_traces at 0x7faf46cd6250>

    transitions: Iterable[TransitionStep[S]] = \
        unit_experiences_from_episodes(episodes, episode_length)
    transitions

<itertools.chain at 0x7faf462029d0>

Here are the value function approximations:

    vfas: Iterator[ValueFunctionApprox[InventoryState]] = \
        td_prediction(
            transitions=transitions,
            # approx_0=Tabular(values_map=initial_vf_dict),
            approx_0=Tabular(count_to_weight_func=learning_rate_func),
            γ=item_gamma,
            # episode_length_tolerance=1e-6
        )
    vfas

<itertools.accumulate at 0x7faf46212230>

CPU times: user 1min 3s, sys: 2.05 s, total: 1min 5s
Wall time: 1min 7s

CPU times: user 31.1 ms, sys: 997 µs, total: 32.1 ms
Wall time: 32 ms

Tabular(values_map={NonTerminal(state=InventoryState(on_hand=0, on_order=1)): -27.972185110983187, NonTerminal(state=InventoryState(on_hand=1, on_order=1)): -29.3505025445315, NonTerminal(state=InventoryState(on_hand=0, on_order=0)): -35.53250116986358, NonTerminal(state=InventoryState(on_hand=0, on_order=2)): -28.399420194203877, NonTerminal(state=InventoryState(on_hand=2, on_order=0)): -30.495176822532102, NonTerminal(state=InventoryState(on_hand=1, on_order=0)): -28.930150363990364}, counts_map={NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 276663, NonTerminal(state=InventoryState(on_hand=1, on_order=1)): 162022, NonTerminal(state=InventoryState(on_hand=0, on_order=0)): 117273, NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 117683, NonTerminal(state=InventoryState(on_hand=2, on_order=0)): 163210, NonTerminal(state=InventoryState(on_hand=1, on_order=0)): 163148}, count_to_weight_func=<function learning_rate_schedule.<locals>.lr_func at 0x7faf46204830>)

After Temporal-Difference:

{NonTerminal(state=InventoryState(on_hand=0, on_order=0)): -35.533,

NonTerminal(state=InventoryState(on_hand=0, on_order=2)): -28.399,

NonTerminal(state=InventoryState(on_hand=0, on_order=1)): -27.972,

NonTerminal(state=InventoryState(on_hand=1, on_order=0)): -28.93,

NonTerminal(state=InventoryState(on_hand=1, on_order=1)): -29.351,

NonTerminal(state=InventoryState(on_hand=2, on_order=0)): -30.495}

Exact Value Function:

{NonTerminal(state=InventoryState(on_hand=0, on_order=0)): -35.511,

NonTerminal(state=InventoryState(on_hand=0, on_order=2)): -28.345,

NonTerminal(state=InventoryState(on_hand=0, on_order=1)): -27.932,

NonTerminal(state=InventoryState(on_hand=1, on_order=0)): -28.932,

NonTerminal(state=InventoryState(on_hand=1, on_order=1)): -29.345,

NonTerminal(state=InventoryState(on_hand=2, on_order=0)): -30.345}

The comparison shows that, with 10,000 episodes, Tabular Temporal-Difference Prediction comes close to the exact values: every estimate is within about 0.15 of the exact value, which is under 0.5% in relative terms.
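The size of the gap can be checked directly from the two printed tables. The sketch below copies the rounded values shown above into plain dictionaries keyed by (on_hand, on_order) tuples; the tuple keys and variable names are a simplification for this example, not the notebook's own data structures.

```python
# Rounded values copied from the two tables above, keyed by (on_hand, on_order).
td_estimate = {
    (0, 0): -35.533, (0, 2): -28.399, (0, 1): -27.972,
    (1, 0): -28.93, (1, 1): -29.351, (2, 0): -30.495,
}
exact = {
    (0, 0): -35.511, (0, 2): -28.345, (0, 1): -27.932,
    (1, 0): -28.932, (1, 1): -29.345, (2, 0): -30.345,
}

# Absolute error per state, and the state where the estimate is furthest off.
abs_errors = {s: abs(td_estimate[s] - exact[s]) for s in exact}
worst_state = max(abs_errors, key=abs_errors.get)

for s in sorted(abs_errors):
    print(s, round(abs_errors[s], 3))
print("largest error at", worst_state)
```

The largest absolute error occurs at the state with on_hand=2 and on_order=0.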

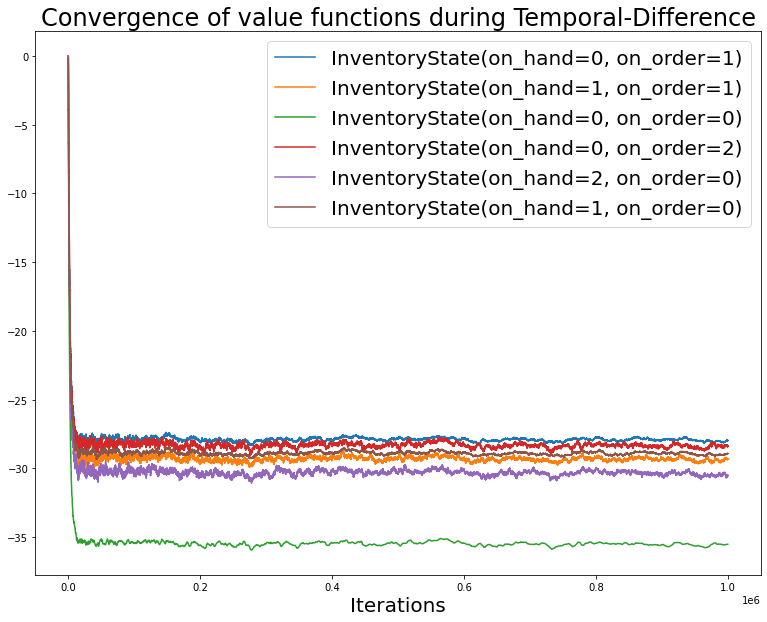

Let us visualize the convergence of the State Value Function for each of the states:

Tabular(values_map={NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 0.0, NonTerminal(state=InventoryState(on_hand=1, on_order=1)): -0.06109149705429809, NonTerminal(state=InventoryState(on_hand=0, on_order=0)): -0.590854609034142, NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 0.0, NonTerminal(state=InventoryState(on_hand=2, on_order=0)): -0.21529245146013765, NonTerminal(state=InventoryState(on_hand=1, on_order=0)): -0.14036383235143268}, counts_map={NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 1, NonTerminal(state=InventoryState(on_hand=1, on_order=1)): 1, NonTerminal(state=InventoryState(on_hand=0, on_order=0)): 2, NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 2, NonTerminal(state=InventoryState(on_hand=2, on_order=0)): 3, NonTerminal(state=InventoryState(on_hand=1, on_order=0)): 1}, count_to_weight_func=<function learning_rate_schedule.<locals>.lr_func at 0x7faf46204830>)

{NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 0.0,

NonTerminal(state=InventoryState(on_hand=1, on_order=1)): -0.06109149705429809,

NonTerminal(state=InventoryState(on_hand=0, on_order=0)): -0.590854609034142,

NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 0.0,

NonTerminal(state=InventoryState(on_hand=2, on_order=0)): -0.21529245146013765,

NonTerminal(state=InventoryState(on_hand=1, on_order=0)): -0.14036383235143268}

{NonTerminal(state=InventoryState(on_hand=0, on_order=1)): 1,

NonTerminal(state=InventoryState(on_hand=1, on_order=1)): 1,

NonTerminal(state=InventoryState(on_hand=0, on_order=0)): 2,

NonTerminal(state=InventoryState(on_hand=0, on_order=2)): 2,

NonTerminal(state=InventoryState(on_hand=2, on_order=0)): 3,

NonTerminal(state=InventoryState(on_hand=1, on_order=0)): 1}

Let us visualize how the value function for each state converges during the operation of the Temporal-Difference algorithm.

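    import matplotlib.pyplot as plt

    fig, axs = plt.subplots(figsize=(13, 10))
    axs.set_xlabel('Iterations', fontsize=20)
    axs.set_title('Convergence of value functions during Temporal-Difference', fontsize=24)
    # merged_dict maps each state to its sequence of value estimates;
    # the loop variable is not named `it`, which would shadow the itertools alias
    for state, values in merged_dict.items():
        axs.plot(values, label=f'{state.state}')
    axs.legend(fontsize=20);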

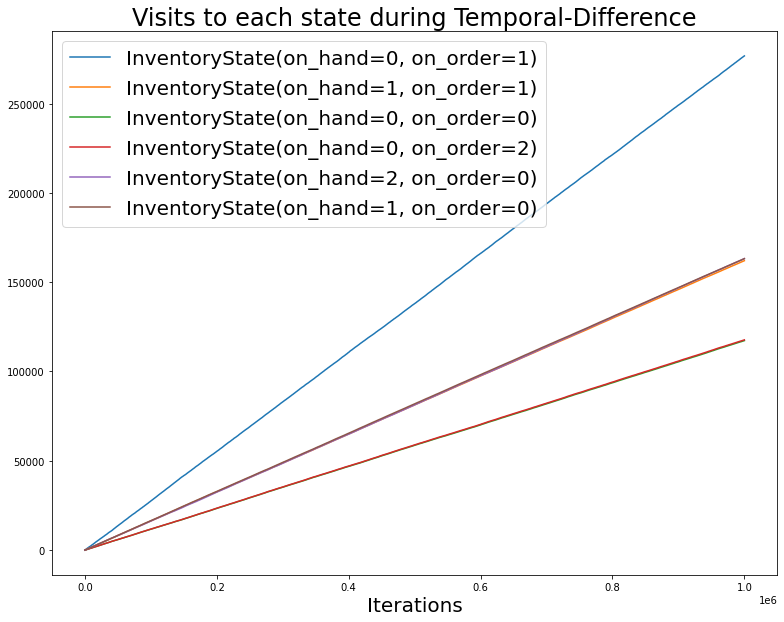

Next we visualize the number of visits for each state during the operation of the Temporal-Difference algorithm.

    import matplotlib.pyplot as plt

    fig, axs = plt.subplots(figsize=(13, 10))
    axs.set_xlabel('Iterations', fontsize=20)
    axs.set_title('Visits to each state during Temporal-Difference', fontsize=24)
    # merged_dict here maps each state to its sequence of visit counts;
    # the loop variable is not named `it`, which would shadow the itertools alias
    for state, counts in merged_dict.items():
        axs.plot(counts, label=f'{state.state}')
    axs.legend(fontsize=20);