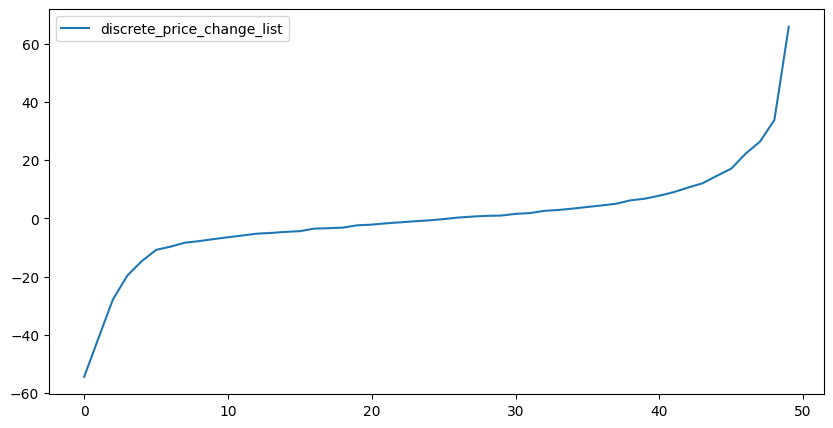

Starting BackwardDP 2D for price discretization with inc=1.0

State dimension: 2. State space size: 218. Exogenous info size: 50

self.discretePrices=array([ 0., 1., 2., 3., 4., 5., 6., 7., 8., 9., 10.,
11., 12., 13., 14., 15., 16., 17., 18., 19., 20., 21.,
22., 23., 24., 25., 26., 27., 28., 29., 30., 31., 32.,
33., 34., 35., 36., 37., 38., 39., 40., 41., 42., 43.,
44., 45., 46., 47., 48., 49., 50., 51., 52., 53., 54.,
55., 56., 57., 58., 59., 60., 61., 62., 63., 64., 65.,
66., 67., 68., 69., 70., 71., 72., 73., 74., 75., 76.,
77., 78., 79., 80., 81., 82., 83., 84., 85., 86., 87.,
88., 89., 90., 91., 92., 93., 94., 95., 96., 97., 98.,
99., 100., 101., 102., 103., 104., 105., 106., 107., 108.])

self.discreteEnergy=array([0., 1.])

Time_elapsed_2D_model=147.04 secs.

Starting policy evaluation for the actual sample path

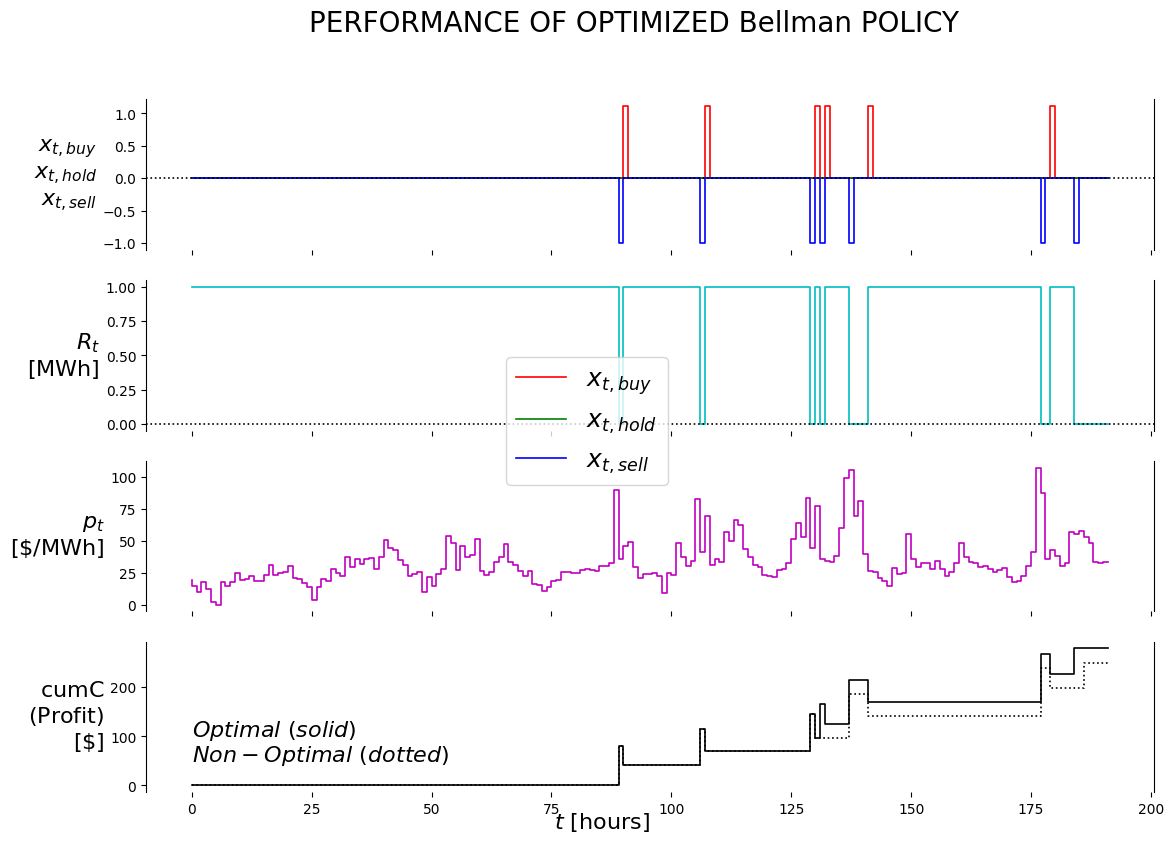

Finishing inc 1.0 with cumC 249.66. Best inc so far 1.0. Best contribution 249.66

Starting BackwardDP 2D for price discretization with inc=0.5

State dimension: 2. State space size: 432. Exogenous info size: 50

self.discretePrices=array([ 0. , 0.5, 1. , 1.5, 2. , 2.5, 3. , 3.5, 4. ,
4.5, 5. , 5.5, 6. , 6.5, 7. , 7.5, 8. , 8.5,
9. , 9.5, 10. , 10.5, 11. , 11.5, 12. , 12.5, 13. ,
13.5, 14. , 14.5, 15. , 15.5, 16. , 16.5, 17. , 17.5,
18. , 18.5, 19. , 19.5, 20. , 20.5, 21. , 21.5, 22. ,
22.5, 23. , 23.5, 24. , 24.5, 25. , 25.5, 26. , 26.5,
27. , 27.5, 28. , 28.5, 29. , 29.5, 30. , 30.5, 31. ,
31.5, 32. , 32.5, 33. , 33.5, 34. , 34.5, 35. , 35.5,
36. , 36.5, 37. , 37.5, 38. , 38.5, 39. , 39.5, 40. ,
40.5, 41. , 41.5, 42. , 42.5, 43. , 43.5, 44. , 44.5,
45. , 45.5, 46. , 46.5, 47. , 47.5, 48. , 48.5, 49. ,
49.5, 50. , 50.5, 51. , 51.5, 52. , 52.5, 53. , 53.5,
54. , 54.5, 55. , 55.5, 56. , 56.5, 57. , 57.5, 58. ,
58.5, 59. , 59.5, 60. , 60.5, 61. , 61.5, 62. , 62.5,
63. , 63.5, 64. , 64.5, 65. , 65.5, 66. , 66.5, 67. ,
67.5, 68. , 68.5, 69. , 69.5, 70. , 70.5, 71. , 71.5,
72. , 72.5, 73. , 73.5, 74. , 74.5, 75. , 75.5, 76. ,
76.5, 77. , 77.5, 78. , 78.5, 79. , 79.5, 80. , 80.5,
81. , 81.5, 82. , 82.5, 83. , 83.5, 84. , 84.5, 85. ,
85.5, 86. , 86.5, 87. , 87.5, 88. , 88.5, 89. , 89.5,
90. , 90.5, 91. , 91.5, 92. , 92.5, 93. , 93.5, 94. ,
94.5, 95. , 95.5, 96. , 96.5, 97. , 97.5, 98. , 98.5,
99. , 99.5, 100. , 100.5, 101. , 101.5, 102. , 102.5, 103. ,
103.5, 104. , 104.5, 105. , 105.5, 106. , 106.5, 107. , 107.5])

self.discreteEnergy=array([0., 1.])

Time_elapsed_2D_model=480.19 secs.

Starting policy evaluation for the actual sample path

Finishing inc 0.5 with cumC 278.75. Best inc so far 0.5. Best contribution 278.75

Finishing BellmanSearch in 0.95 secs

CPU times: user 10min 21s, sys: 1.17 s, total: 10min 23s

Wall time: 10min 29s
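
The state-space sizes reported in the log can be checked with a short sketch. It assumes each price grid runs from 0 to the last value printed in the corresponding array (108.0 for inc=1.0, 107.5 for inc=0.5) and that the state is a (price, energy) pair. The helper `price_grid` is a hypothetical name, not a function from the model code, and the rule the model actually uses to choose the upper price bound is not shown in the output, so the endpoints are simply copied from the log.

```python
import numpy as np

def price_grid(last_price, inc):
    """Evenly spaced prices from 0 to last_price inclusive (hypothetical helper)."""
    # Pad the stop value by half a step so the endpoint survives float rounding.
    return np.arange(0.0, last_price + inc / 2, inc)

# Values copied from the log above:
# (increment, last grid price, backward-DP runtime in secs, cumulative contribution)
runs = [
    (1.0, 108.0, 147.04, 249.66),
    (0.5, 107.5, 480.19, 278.75),
]
energy_levels = np.array([0.0, 1.0])  # matches self.discreteEnergy in the log

sizes = {}
for inc, last_price, secs, cum_c in runs:
    prices = price_grid(last_price, inc)
    # Two-dimensional state: every price paired with every energy level.
    sizes[inc] = len(prices) * len(energy_levels)
    print(f"inc={inc}: {len(prices)} prices x {len(energy_levels)} "
          f"energy levels = {sizes[inc]} states")

# Cost and benefit of halving the price increment.
runtime_ratio = runs[1][2] / runs[0][2]
gain = runs[1][3] / runs[0][3] - 1
print(f"runtime ratio: {runtime_ratio:.2f}x, contribution gain: {gain:.1%}")
```

Under these assumptions the sketch reproduces the 218 and 432 states reported above. On this single sample path, the finer grid takes roughly three times as long to solve while improving the cumulative contribution from 249.66 to 278.75.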